I. Introduction

In recent years, systems featuring automatically marked online programming exercises have become increasingly common. These systems generate large amounts of data that can be mined for insight into student programming behavior and used to predict student performance.

Predictors of student performance based on programming exercises have become increasingly sophisticated. Jadud’s early work [1] focused on syntax errors and found correlations between term marks and programming errors. Watson et al. [2] extended these ideas by incorporating the time spent solving problems, which increased predictive power. Most recently, Carter et al. [3] reported on a heuristic that accounts for some semantic correctness. They claim that a combination of programming practices and errors at the IDE level can be used to detect struggling students in introductory-level computer science courses earlier. All of these measures were applied to relatively large programming assignments.

In contrast, earlier this year, Spacco et al. [4] investigated participation and performance data from short, web-based programming exercises and reported correlations between performance on these exercises and overall performance in the course. We also investigated short, online exercises, but instead of focusing on participation, we considered the number of attempts required to complete problems successfully.

II. Approach

Our data set includes exercises completed by 368 students in a first-year programming course at a research-intensive university. Students who did not complete the course (including the exam) or who scored below 30% (indicating a lack of engagement in the course) were excluded. The course is taught in Python and covers content ranging from variable assignment to loops and module-level design. It attracts students from a wide range of ability levels, including students with no prior programming experience. While the course is a prerequisite for many higher-level computer science courses, it also attracts students completing electives. The course features a midterm and a final exam. In addition to these assessments, students complete 42 coding and multiple-choice questions spread across weekly problem sets and submit three longer assignments that are not completed online.

We analyzed the multiple-choice questions by calculating the number of questions a student completed under the average number of attempts required. Spacco et al. reported that in the majority of computer science courses they investigated, there was a statistically significant (p-value < 0.05) relationship between a student’s final exam mark and both the number of questions attempted and the number of questions completed correctly. In our data set, however, students were allowed to retry questions until they got a correct answer, so, unlike in Spacco et al.’s study, most students were able to submit a correct answer to every question they attempted. As a result, we used the average number of attempts to identify students with a stronger understanding of the concepts being evaluated.
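The attempt-based count described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name, the nested-dictionary layout, and the toy data are all hypothetical. The text describes the threshold as both "under" and "within" the average; the sketch assumes that a question counts when the student's attempts are at or below the class average for that question.

```python
from statistics import mean


def count_within_average(attempts):
    """Count, per student, the questions answered in at most the
    class-average number of attempts for that question.

    attempts: dict mapping student id -> dict mapping question id ->
    number of attempts the student needed (hypothetical layout).
    """
    # Gather every student's attempt count for each question.
    per_question = {}
    for answers in attempts.values():
        for question, n in answers.items():
            per_question.setdefault(question, []).append(n)

    # Class-average attempts for each question.
    averages = {q: mean(ns) for q, ns in per_question.items()}

    # Assumption: "within the average" is read as <= the average.
    return {
        student: sum(1 for q, n in answers.items() if n <= averages[q])
        for student, answers in attempts.items()
    }


# Toy data, for illustration only.
attempts = {
    "s1": {"q1": 1, "q2": 2},
    "s2": {"q1": 3, "q2": 2},
    "s3": {"q1": 2, "q2": 5},
}
scores = count_within_average(attempts)
```

Changing `<=` to `<` gives the strict "under the average" reading instead.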

We analyzed the coding exercises by using linear regression to identify the programming exercises with the strongest relationship with exam performance. Our analysis identified six exercises (of twenty) with a strong correlation to final exam marks, and a review of these six exercises confirms that they are more directly comparable to questions on the midterm and exam in terms of difficulty and content coverage.
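One way to screen exercises like this is sketched below. It is an assumption-laden illustration: the text does not say whether a single multiple regression or one regression per exercise was used, so the sketch fits a simple linear regression of exam mark on each exercise separately and ranks exercises by the resulting R-squared. The names `r_squared` and `rank_exercises` and the toy data are hypothetical.

```python
def r_squared(xs, ys):
    """R-squared of a simple linear regression of ys on xs
    (equal to the squared Pearson correlation)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0 or syy == 0:
        # A constant predictor or outcome explains no variance.
        return 0.0
    return (sxy * sxy) / (sxx * syy)


def rank_exercises(exercise_scores, exam_marks, top_k):
    """Return the names of the top_k exercises by R-squared against exam marks."""
    ranked = sorted(
        exercise_scores,
        key=lambda name: r_squared(exercise_scores[name], exam_marks),
        reverse=True,
    )
    return ranked[:top_k]


# Toy data: 1 = exercise solved correctly, 0 = not (illustration only).
exam_marks = [50, 60, 70, 80]
exercise_scores = {
    "ex_a": [0, 0, 1, 1],
    "ex_b": [1, 0, 1, 0],
    "ex_c": [1, 1, 1, 1],
}
top_two = rank_exercises(exercise_scores, exam_marks, top_k=2)
```

In this sketch, an exercise that every student solves (such as `ex_c`) carries no information about exam marks and ranks last.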

We combined these two measures to predict student performance. The resulting prediction score is based on the number of multiple-choice questions a student completed within the average number of attempts and on the student's correctness on the six coding questions.
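The text does not give the exact form of the combination, so the following is only a sketch under stated assumptions: each student is represented by seven predictors (the multiple-choice count plus one correctness flag for each of the six coding questions), and the score is a linear combination of them. The function names and the coefficient values are hypothetical placeholders, not fitted values from the study.

```python
def feature_row(mcq_within_average, coding_correct):
    """Build one student's predictor vector: the multiple-choice count
    followed by a 0/1 flag for each selected coding question."""
    return [float(mcq_within_average)] + [1.0 if ok else 0.0 for ok in coding_correct]


def prediction_score(features, weights, intercept=0.0):
    """Linear combination of the predictors (assumed form of the score)."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    return intercept + sum(w * x for w, x in zip(weights, features))


# Hypothetical coefficients, for illustration only (not fitted to any data).
example_weights = [1.0, 2.0, 2.0, 2.0, 2.0, 2.0, 2.0]

# A student with 15 qualifying multiple-choice questions and
# four of the six coding questions correct.
row = feature_row(15, [True, True, False, True, False, True])
score = prediction_score(row, example_weights, intercept=40.0)
```

In practice the weights and intercept would come from fitting a regression of exam marks on these predictors rather than being chosen by hand.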

III. Results and Analysis

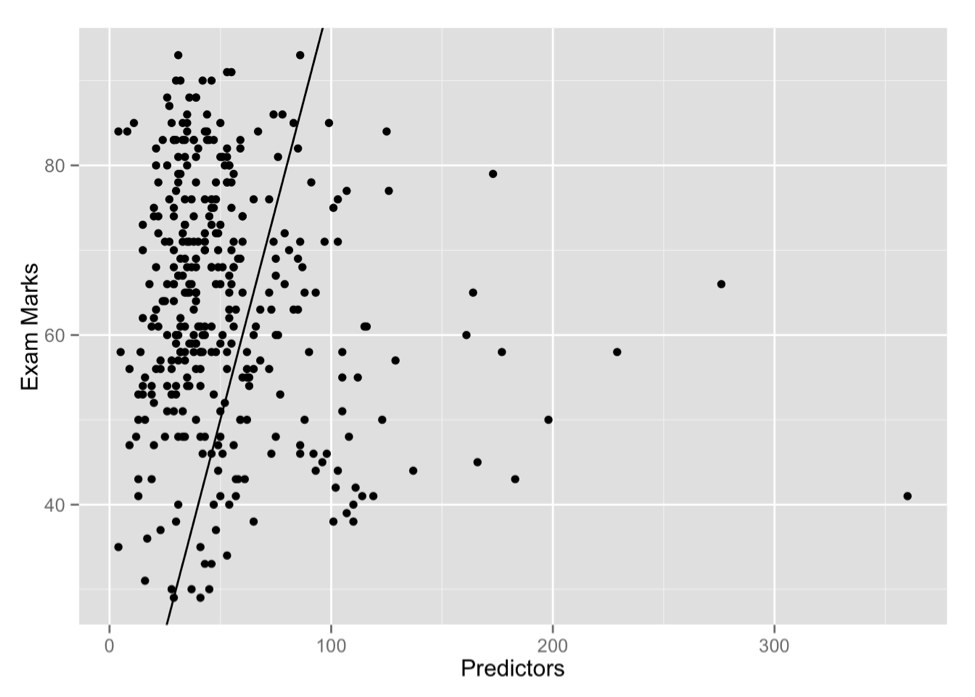

Overall, a weak correlation, comparable to those found by Watson et al. [2], exists between a student’s prediction score and final exam mark. The results are significant (p-value < 0.001), with an adjusted R-squared value of 0.2956. The F-statistic is 21.16 on 7 and 353 degrees of freedom.
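For readers less familiar with these statistics, the standard textbook definitions for a linear regression with n observations and p predictors can be sketched as below. The function names and example numbers are illustrative assumptions and are not taken from the study's data. In this notation, the reported 7 and 353 degrees of freedom correspond to p and n - p - 1.

```python
def adjusted_r_squared(r2, n, p):
    """Adjusted R-squared: penalizes R-squared for the number of predictors p."""
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


def f_statistic(r2, n, p):
    """Overall F-statistic on p and n - p - 1 degrees of freedom."""
    return (r2 / p) / ((1.0 - r2) / (n - p - 1))


# Illustrative values only: R-squared 0.5 from 11 observations and 2 predictors.
example_adjusted = adjusted_r_squared(0.5, n=11, p=2)
example_f = f_statistic(0.5, n=11, p=2)
```

Because the adjustment factor (n - 1) / (n - p - 1) is always greater than 1 when p is positive, adjusted R-squared is always lower than R-squared unless the fit is perfect.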

The questions evaluated are similar in structure and content to those on the final exam. Furthermore, they serve as assessments, because they were marked after the content had been taught and practiced. This suggests that performance on individual, unsupervised exercises in a low-stress environment can be predictive of performance on similar problems in a summative assessment. Successfully completing these low-stakes questions without guessing or needing an excessive number of attempts suggests that a student is likely to be able to apply similar concepts in an exam environment.

IV. Conclusion

There is a weak but statistically significant correlation between performance on online exercises and final exam performance, which suggests that automated online assignments hold promise for predicting exam results. Spacco et al. [4] showed that statistically significant relationships exist between participation in online assignments and exam performance. We found that much stronger correlations (on the order of those discovered in data from larger assignments) can be obtained by focusing on relative performance and by selecting coding exercises with a difficulty level similar to that of the summative assessments. Instructors may be able to assign such problems in a course and then apply similar analysis techniques while the course is ongoing to identify students at risk of failing the exam.

References

[1] M. C. Jadud, "A First Look at Novice Compilation Behaviour Using BlueJ," Computer Science Education, vol. 15, 2005.

[2] C. Watson, F. W. Li and J. L. Godwin, "No Tests Required: Comparing Traditional and Dynamic Predictors of Programming Success," Proceedings of the 45th ACM Technical Symposium on Computer Science Education, 2014.

[3] A. S. Carter, C. D. Hundhausen and O. Adesope, "The Normalized Programming State Model: Predicting Student Performance in Computing Courses Based on Programming Behavior," Proceedings of the Eleventh Annual International Conference on International Computing Education Research, 2015.

[4] J. Spacco, P. Denny, B. Richards, D. Babcock, D. Hovemeyer and J. Moscola, "Analyzing Student Work Patterns Using Programming Exercise Data," Proceedings of the 46th ACM Technical Symposium on Computer Science Education, 2015.